Introduction to Part 3

This is the third and final part of our blog series “From Script to Data”, which shows how to use the Ontology for Media Creation to improve communication and automation in the production process. Part 1 went from the script to a set of narrative elements, and Part 2 moved from narrative elements to production elements. Here we will use OMC to go from a set of production elements into the world of filming, slates, shots, and sequences.

Combining Narrative Elements and Production Elements

Toe bone connected to the foot bone

Foot bone connected to the heel bone

Heel bone connected to the ankle bone

…

Back bone connected to the shoulder bone

Shoulder bone connected to the neck bone

Neck bone connected to the head bone

Hear the word of the Lord.

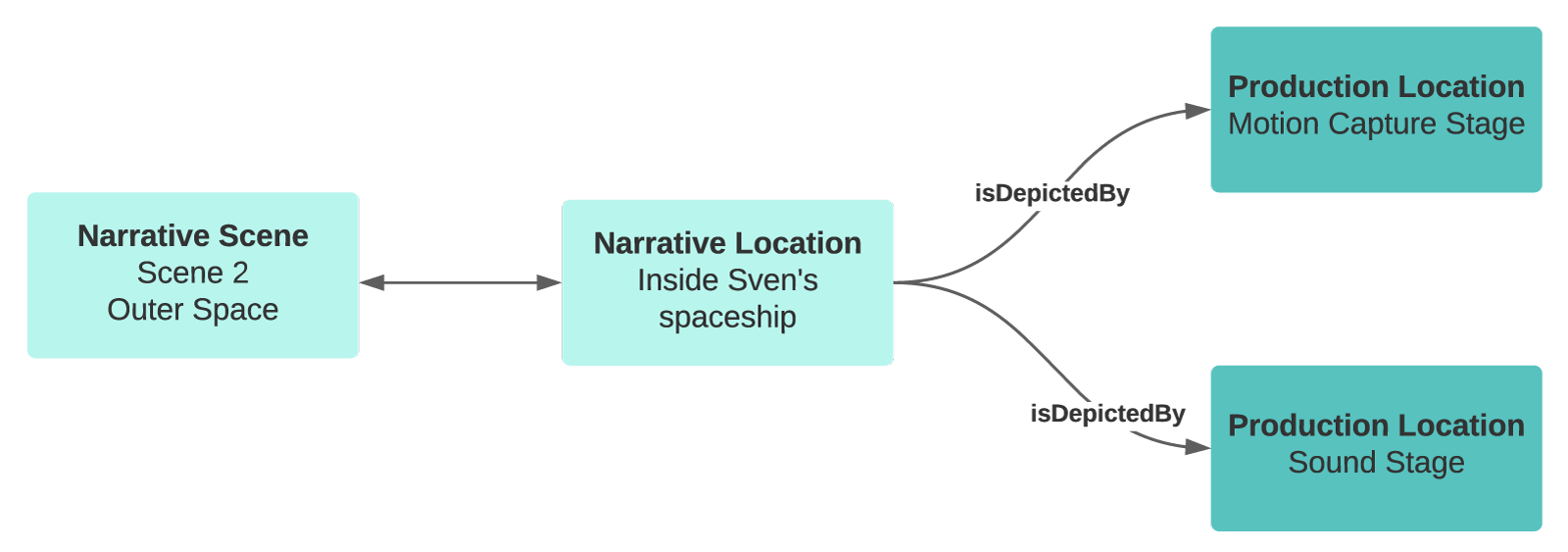

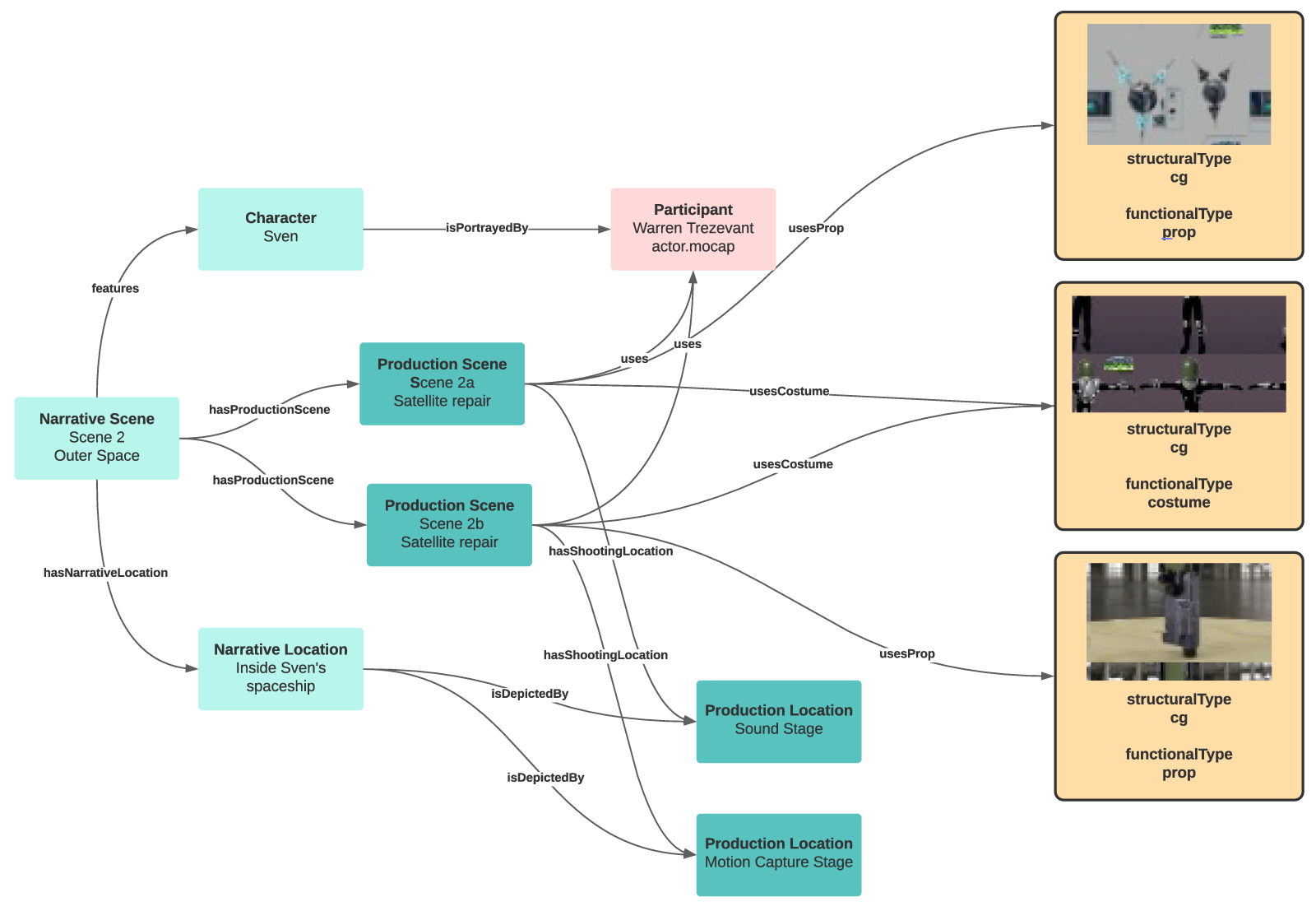

Even though we have extracted and mapped many of the narrative and production elements, there’s still something missing before filming starts: where is filming going to happen? Just as Narrative Props, Wardrobe, and Characters are depicted by production elements, Narrative Locations have to be depicted as well. The Ontology defines Production Location for this.

Production Location: A real place that is used to depict the Narrative Location or used for creating the Creative Work.

We’ll use two Production Locations. Production Scene 2A uses a stage where Sven is filmed so that he can be overlaid on the VFX/CG rendering of the satellite, and Production Scene 2B uses a different stage with a built set of the inside of Sven’s spaceship. (Don’t worry – the jungle scenes will be on location in Hawaii…)

This shows how Narrative Locations are depicted by Production Locations. The depiction may need just the Production Location, but it can also require sets and set dressing at the Production Location.

A production team can do lots of things with the information behind this representation. Many of these are done manually today but can be automated because OMC is well defined and machine-readable. For example, now that the data is standardized, all production applications can generate, share, and edit it, enabling users to:

- Avoid the trade-offs of tracking this information by hand in a spreadsheet. For some things (e.g., a Character that appears in more than one scene), whoever maintains the spreadsheet has to decide whether to add multiple rows (as above), risking copy-and-paste mistakes and making the spreadsheet longer; add multiple columns, e.g., a single row for a character with a column for each scene, which requires rejigging things when someone appears in a new scene; or have a single row per character listing all of its Narrative Scenes with some kind of separator, which requires agreeing on the separator and editing lists when something changes. All of this is doable in a small production but adds risk, overhead, and chances of discrepancy in a large one.

- Find production scenes that have the same Production Location and arrange shoot days to take that into account, including which actors need to be present.

- Based on the shoot day for a Production Scene, find the Production Props and Costumes needed for it, and schedule them (and any precursors) accordingly.

- Automatically track changes in the script and propagate them through the production pipeline.

- Change a character name – or anything else such as actor, prop, or location – in one place and have it propagate through the rest of the system: Sven can reliably become Hjalmar, for example.
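The first two items above can be made concrete with a minimal Python sketch. It runs over a toy in-memory model, not OMC's actual schema. It groups Production Scenes by Production Location and collects the actors and props each location's scenes need, which is the raw material for planning shoot days. The class and field names, the stage labels, the actor and prop values, and Production Scene 5A are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ProductionScene:
    scene_id: str
    location: str                                  # Production Location used for filming
    actors: set[str] = field(default_factory=set)  # hypothetical actor identifiers
    props: set[str] = field(default_factory=set)   # hypothetical Production Prop names


def group_by_location(scenes):
    """Map each Production Location to the scenes filmed there, plus the union
    of actors and props those scenes need (a starting point for shoot days)."""
    plan = defaultdict(lambda: {"scenes": [], "actors": set(), "props": set()})
    for scene in scenes:
        entry = plan[scene.location]
        entry["scenes"].append(scene.scene_id)
        entry["actors"] |= scene.actors
        entry["props"] |= scene.props
    return dict(plan)


scenes = [
    ProductionScene("2A", "Stage 1", {"actor_sven"}, {"communicator"}),
    ProductionScene("2B", "Stage 2", {"actor_sven"}, {"communicator"}),
    ProductionScene("5A", "Stage 2", {"actor_sven", "actor_b"}),  # hypothetical scene
]
for location, entry in sorted(group_by_location(scenes).items()):
    print(location, entry["scenes"], sorted(entry["actors"]), sorted(entry["props"]))
```

In practice, the scenes would come from the shared data rather than a hard-coded list. A change made upstream, such as a recast actor, would then appear here without re-keying.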

Time to Film

Dem bones, dem bones gonna walk around.

Dem bones, dem bones gonna walk around.

Dem bones, dem bones gonna dance around.

Now hear the word of the Lord.

The diagram above is, of course, incomplete, but OMC supports the missing items, including other Participants (the director, cinematographer, camera crew, and so on) and Assets (set dressing, for example) and, as mentioned above, can express infrastructure components as well.

Including that level of detail would make this post even longer than it already is, so we’ll imagine that it’s all in place. At last, it is time to film something. To do this, we have to introduce two new concepts. Both are very complex, but here we will stick to basic information and the things related to connectivity.

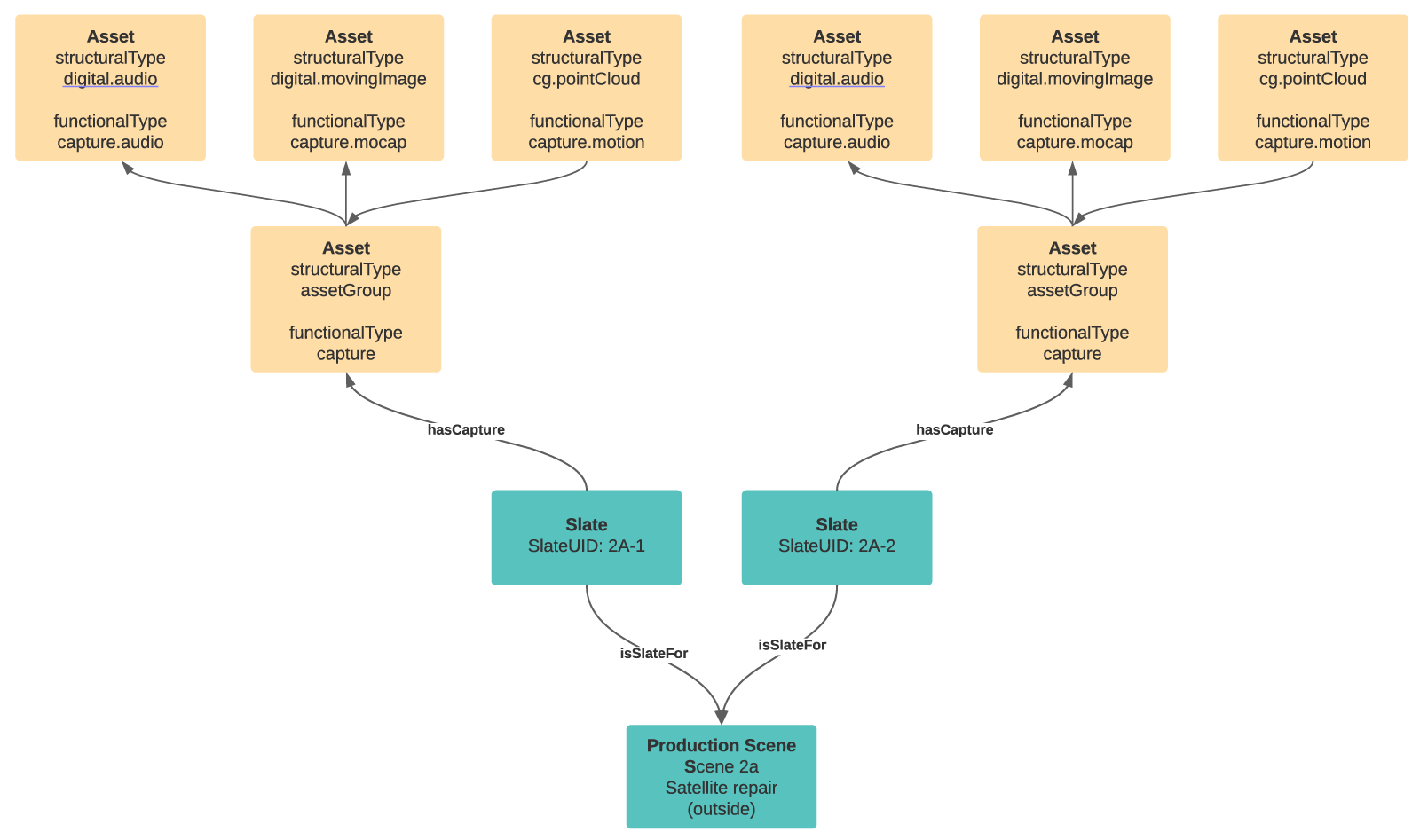

The first of these is: what do we call the recording of the acted-out scene? ‘Footage’ dates from when scenes were shot on film, which was measured in feet. ‘Recording’ is more often used for just sound. OMC uses ‘Capture’, which covers audio, video, motion capture, and whatever else may show up one day:

Capture: The result of recording any event by any means

The second is: how is the Capture connected back to the Production Scene? Any production also needs a lot of other information: the camera used, the take number (since almost nothing is right the first time), and so on. Traditionally, this was written on a slate or clapperboard that was recorded at the start of the Capture, which made it easy to find and hard to lose. The term is still used in modern productions, even when there is no physical writing surface involved. OMC also makes this information hard to lose, because the digital slate and its information are connected to the Capture by a relationship, and it makes the information easy for software to access and use. (Some current systems extract the information from a slate in a video capture, either manually or with image processing software, and save it elsewhere, but this is not ideal.)

Slate: Used to capture key identifying information about what is being recorded on any given setup and take.

The Slate has a great deal of information in it, for which see the section in OMC: Part 2 Context. For now, we’ll focus on just three things:

- The Slate Unique Identifier (UID), which is a semi-standard way of specifying important information in a single string.
- The Production Scene, which can be extracted from a standard Slate UID.
- The Take, the counter of how many times this scene has been captured.
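The Production Scene and Take can be recovered from the UID, so a Slate can be built directly from it. The Python sketch below assumes a hypothetical UID layout of `<scene>-T<take>`, for example `2A-T002`. Real Slate UIDs carry more information and follow production-specific conventions, so treat the pattern as illustrative only.

```python
import re
from dataclasses import dataclass

# Hypothetical UID layout for illustration only: <scene>-T<take>, e.g. "2A-T002".
UID_PATTERN = re.compile(r"^(?P<scene>[0-9]+[A-Za-z]*)-T(?P<take>[0-9]+)$")


@dataclass(frozen=True)
class Slate:
    uid: str
    production_scene: str  # extracted from the UID
    take: int              # how many times this scene has been captured


def slate_from_uid(uid: str) -> Slate:
    """Build a Slate whose Production Scene and Take are parsed from its UID."""
    match = UID_PATTERN.match(uid)
    if match is None:
        raise ValueError(f"UID does not match the assumed layout: {uid!r}")
    return Slate(uid=uid, production_scene=match["scene"], take=int(match["take"]))


print(slate_from_uid("2A-T002"))
```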

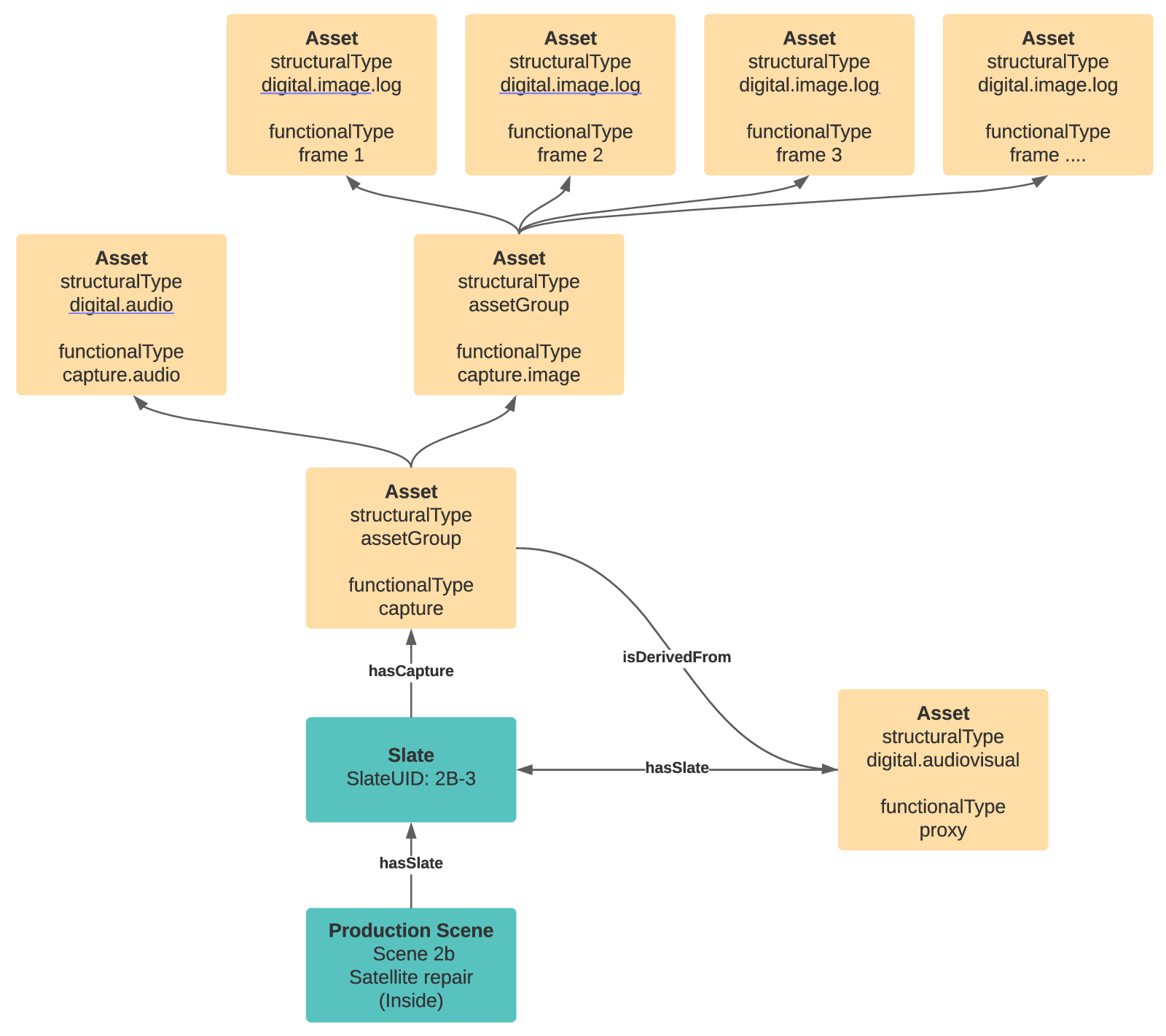

When a production scene is captured, all that is necessary is to create a Slate, connect it to the Production Scene, add the Take and Slate UID, and then record the action. This is repeated for each take, and for each camera angle or camera unit (if it’s being filmed by more than one camera) or type of capture (such as motion capture).
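The slating procedure can be sketched as a small loop. In this Python sketch, a hypothetical `SlateLog` helper issues one Slate per take of a Production Scene. It links each Capture to its Slate as the Capture is recorded. The result has the same shape as the two-take diagram for Production Scene 2A below, with an audio file, a video file, and a point cloud per take. The UID and capture ID formats are assumptions. A multi-camera shoot would repeat the process for each camera unit.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Capture:
    capture_id: str
    kind: str  # e.g. "audio", "video", "point cloud"


@dataclass
class Slate:
    uid: str
    production_scene: str
    take: int
    captures: list[Capture] = field(default_factory=list)


class SlateLog:
    """Hypothetical helper: issues one Slate per take of a Production Scene."""

    def __init__(self) -> None:
        self.slates: list[Slate] = []
        self._capture_ids = count(1)

    def new_take(self, scene: str) -> Slate:
        # The next take number is one more than the takes already slated for this scene.
        take = 1 + sum(1 for s in self.slates if s.production_scene == scene)
        slate = Slate(uid=f"{scene}-T{take:03d}", production_scene=scene, take=take)
        self.slates.append(slate)
        return slate

    def record(self, slate: Slate, kind: str) -> Capture:
        # The Capture is linked to its Slate when it is created, not written down later.
        capture = Capture(capture_id=f"cap-{next(self._capture_ids):04d}", kind=kind)
        slate.captures.append(capture)
        return capture


log = SlateLog()
for _ in range(2):  # two takes of Production Scene 2A
    slate = log.new_take("2A")
    for kind in ("audio", "video", "point cloud"):
        log.record(slate, kind)

for s in log.slates:
    print(s.uid, [c.capture_id for c in s.captures])
```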

The actual captured media is just another kind of Asset, which should be linked with the Slate ID. It may not be convenient to use natively (e.g., if it is an OCF, an Original Camera File) and may require some sort of processing. The process of generating proxies and other video files derived from the OCF is a topic for another day. Essentially, it transforms Assets from one structural form into another while maintaining their relationships back to the Production Scene, and hence to the production elements in it and the underlying narrative elements.
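One way to picture these maintained relationships is as a chain of "derived from" links that ends at an Asset linked to a Slate. The Python sketch below follows that chain from a review file, back through a proxy, to the OCF and its Slate. The record layout, Asset IDs, and format labels are hypothetical and are not OMC's serialization.

```python
from typing import Optional

# Hypothetical records: only the OCF carries the Slate link; everything
# downstream records which Asset it was derived from.
ASSETS = {
    "ocf-0004": {"form": "OCF", "slate": "2A-T002", "derived_from": None},
    "proxy-0004": {"form": "editorial proxy", "slate": None, "derived_from": "ocf-0004"},
    "review-0004": {"form": "review video", "slate": None, "derived_from": "proxy-0004"},
}
SLATES = {"2A-T002": {"production_scene": "2A", "take": 2}}


def slate_of(asset_id: str, assets=ASSETS) -> Optional[str]:
    """Walk 'derived from' links back until reaching an Asset linked to a Slate."""
    seen = set()
    current: Optional[str] = asset_id
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle in derived-from chain at {current!r}")
        seen.add(current)
        record = assets[current]
        if record["slate"] is not None:
            return record["slate"]
        current = record["derived_from"]
    return None  # no Slate link anywhere in the chain


uid = slate_of("review-0004")
print(uid, SLATES[uid]["production_scene"])
```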

As long as the Slate ID remains associated with all the media captured on set, it can link all of the OCFs, proxies, and audio files, even as they head off into different post-production processes. Different internal groups or external vendors, each with their own media storage systems, can carry out these processes. As long as the Asset identifiers and connections to a Slate are retained, it doesn’t matter how things are stored. In the MovieLabs 2030 Vision, Assets don’t have to move, but in the short and intermediate term they often need to. Identifiers and the Slate remove many of the difficulties with this, for example by allowing the use of unstructured object storage rather than hierarchical directories, which are often application-specific. (See our blog on using an asset resolver for more information.)
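The sketch below separates what an Asset is (its identifier and its Slate) from where it happens to live, using two lookups. One holds stable Slate relationships and the other a changeable table of storage locations, loosely in the spirit of an asset resolver. Every ID, bucket name, and URL is hypothetical. The storage URIs are plain strings, and no storage API is called.

```python
# Stable relationships: which Slate each Asset belongs to (hypothetical IDs).
SLATE_OF = {
    "ocf-0004": "2A-T002",
    "proxy-0004": "2A-T002",
    "wav-0004": "2A-T002",
    "ocf-0001": "2A-T001",
}

# Volatile facts: where copies currently live. Keys are opaque object names,
# not paths in a directory tree, so a copy can move without breaking the links.
LOCATIONS = {
    "ocf-0004": ["s3://studio-camera-originals/8f3a1c"],
    "proxy-0004": ["s3://studio-editorial/1c9e07", "https://vfx-vendor.example/objects/77"],
    "wav-0004": ["s3://studio-sound/42d0b9"],
    "ocf-0001": ["s3://studio-camera-originals/b2104e"],
}


def resolve(asset_id: str) -> list[str]:
    """Return every known location for an Asset (empty if none is registered)."""
    return list(LOCATIONS.get(asset_id, []))


def media_for_slate(slate_uid: str) -> dict[str, list[str]]:
    """Find all media for one take, wherever each piece is stored."""
    return {asset_id: resolve(asset_id)
            for asset_id, uid in SLATE_OF.items() if uid == slate_uid}


for asset_id, urls in media_for_slate("2A-T002").items():
    print(asset_id, urls)
```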

This diagram shows two takes and their Captures for Production Scene 2A. Each take (represented by the Slate) generates three captures: an audio file, a video file, and a point cloud.

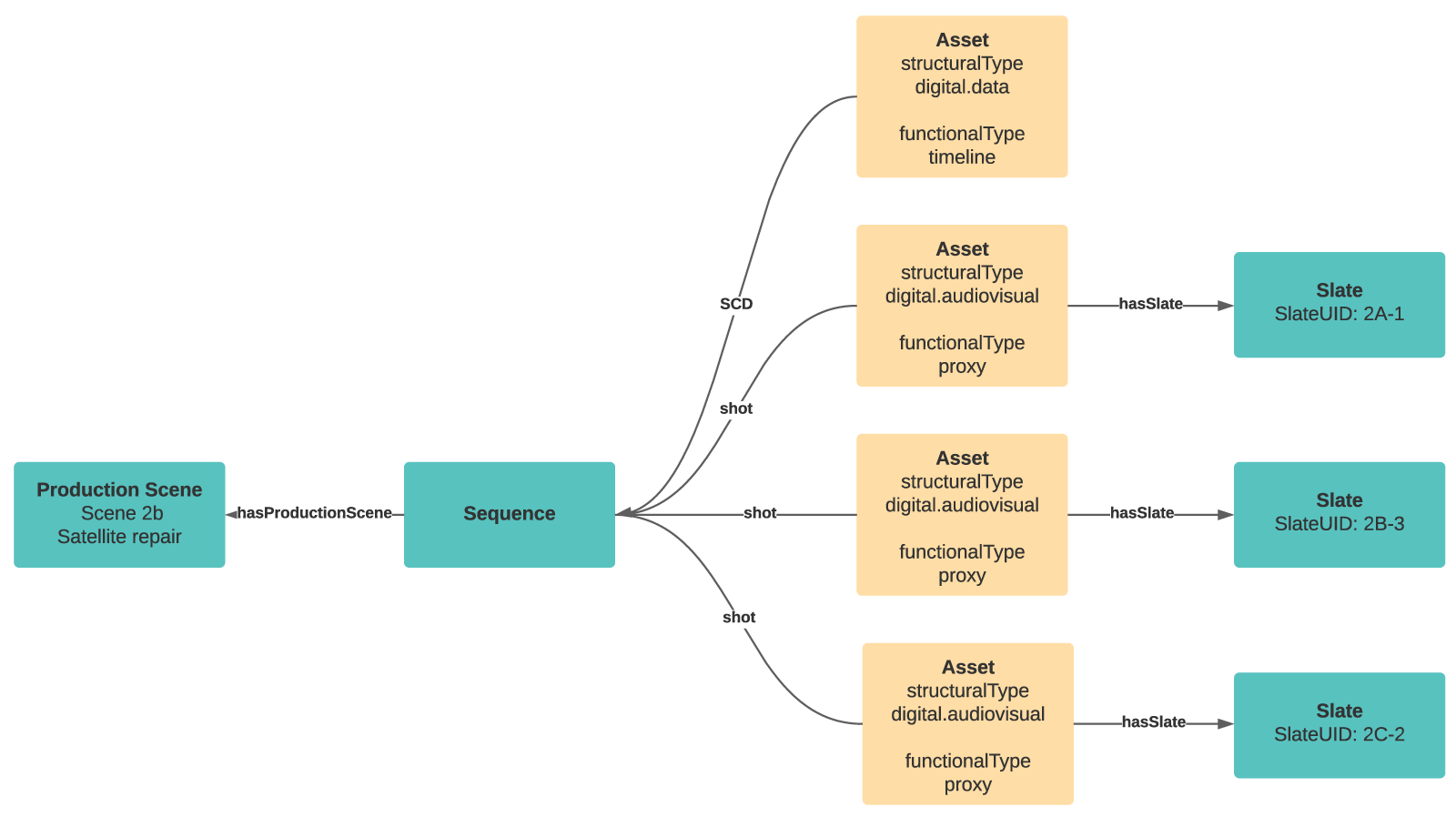

Sequence: An ordered collection of media used to organize units of work.

OMC calls the media used to create this sequence ‘shots.’ ‘Shot’ is used to mean many different things in the production process; for a discussion of some of these, see the section on Shot in OMC: Part 2 Context. The definition of Shot used here is:

Shot: A discrete unit of visual narrative with a specified beginning and end.

A sequence is just a combination of Shots presented in a particular order with specific timing, and in live action film-making a Shot is most often a portion of a Capture – ‘a portion’ because the creative team may decide to use only some of a particular capture. Storyboards can also be used as Shots, for instance, as can other Sequences. This means that a Shot has a reference to its source material.

Finally, a Sequence has a set of directions for turning those Shots into a Sequence. There are several formats for this, such as EDL and AAF. OMC abstracts these into a general Sequence Chronology Descriptor (SCD) which has basic timing information about the portions of shots and where in the Sequence they appear. Exact details of how the Sequence is constructed are application-specific, using a format such as an EDL or OpenTimelineIO. An SCD is an OMC Asset, and the application-specific representation is used as the SCD’s functional characteristics.
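The Python sketch below illustrates the kind of timing an SCD holds. It describes each portion of a Shot by a source frame range and the Sequence frame where that portion starts, then answers "what is on screen at frame N?". The field names and frame values are assumptions made for this example, not OMC's SCD properties. Real edits with transitions, speed changes, or multiple tracks need a richer format such as OpenTimelineIO.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScdEntry:
    shot: str        # the Shot (e.g. a portion of a Capture) being used
    source_in: int   # first source frame used, inclusive
    source_out: int  # end source frame, exclusive
    record_in: int   # Sequence frame where this portion starts

    @property
    def record_out(self) -> int:
        # Exclusive end frame in the Sequence.
        return self.record_in + (self.source_out - self.source_in)


def shot_at(entries, frame):
    """Return (shot, source frame) shown at a given Sequence frame, or None."""
    for e in entries:
        if e.record_in <= frame < e.record_out:
            return e.shot, e.source_in + (frame - e.record_in)
    return None


def check_no_overlap(entries):
    """Raise if two portions claim the same Sequence frames."""
    ordered = sorted(entries, key=lambda e: e.record_in)
    for a, b in zip(ordered, ordered[1:]):
        if b.record_in < a.record_out:
            raise ValueError(f"{a.shot} overlaps {b.shot}")


scd = [
    ScdEntry("shot-1", source_in=100, source_out=148, record_in=0),
    ScdEntry("shot-2", source_in=20, source_out=92, record_in=48),
    ScdEntry("shot-3", source_in=0, source_out=30, record_in=120),
]
check_no_overlap(scd)
print(shot_at(scd, 50))
```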

The SCD allows some visibility into Sequences for applications that may not understand a particular detailed format. It is useful for general planning and tracking, and is another example of OMC making connections that in current productions are manual and easily lost, such as knowing what sequences have to be re-done if a capture is re-shot or a prop is changed.

OMC: Part 2 Context has details on how portions of Shots are specified for adding to a Sequence. This diagram shows the end result at a relatively coarse level of granularity. The Sequence uses the SCD to combine three captured Assets (or portions of those Assets) into a finished representation of Production Scene 2B.

- A Sequence has to be viewable. Often, this means playing it back in an editing tool, but for review and approval, for example, a playable video is needed. In this case, the video is an Asset, connected to the Sequence with a “derived from” relationship.

- A Sequence (or an Asset derived from it) can be used as part of another Sequence.

- In the example above, if a Shot isn’t ready, it can be replaced by part of a Storyboard.
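A Shot only holds a reference to its source. That source can be a portion of a Capture, a Storyboard, or another Sequence, and a placeholder can be swapped out without disturbing the Shot itself. The following is a minimal Python sketch; the class names and IDs are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Union


@dataclass(frozen=True)
class CaptureRef:
    capture_id: str
    start: int  # first frame of the portion used
    end: int    # end frame of the portion used (exclusive)


@dataclass(frozen=True)
class StoryboardRef:
    storyboard_id: str
    panel: int


@dataclass(frozen=True)
class SequenceRef:
    sequence_id: str  # a Sequence (or an Asset derived from it) reused as a Shot


Source = Union[CaptureRef, StoryboardRef, SequenceRef]


@dataclass(frozen=True)
class Shot:
    shot_id: str
    source: Source


def placeholders(shots):
    """List Shots still using a Storyboard in place of captured material."""
    return [s.shot_id for s in shots if isinstance(s.source, StoryboardRef)]


shots = [
    Shot("sh-010", CaptureRef("cap-0004", 100, 148)),
    Shot("sh-020", StoryboardRef("sb-2B", panel=7)),  # Capture not ready yet
    Shot("sh-030", SequenceRef("seq-establishing")),
]
print(placeholders(shots))

# When the Capture arrives, swap the source; the Shot keeps its identity.
shots[1] = replace(shots[1], source=CaptureRef("cap-0007", 12, 60))
print(placeholders(shots))
```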

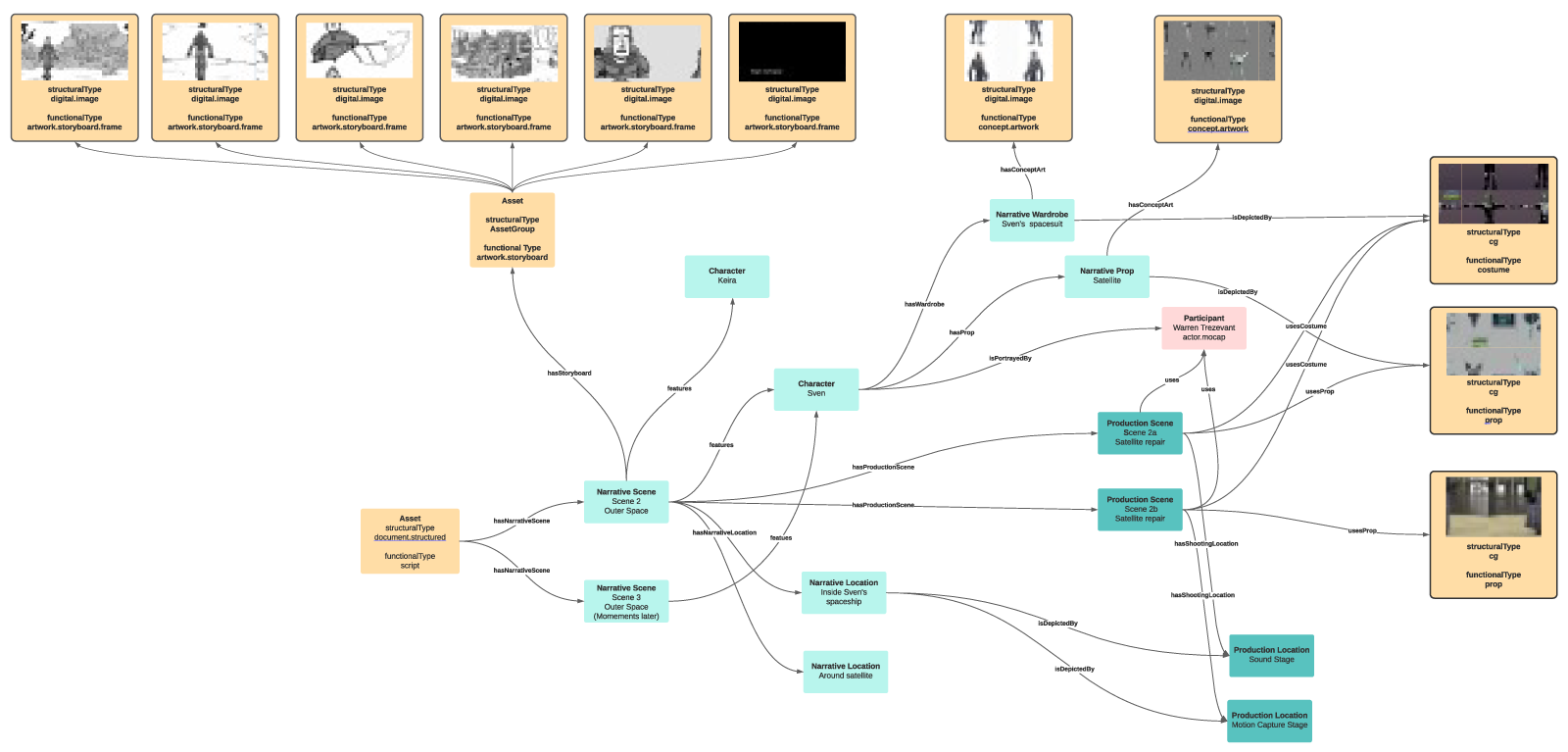

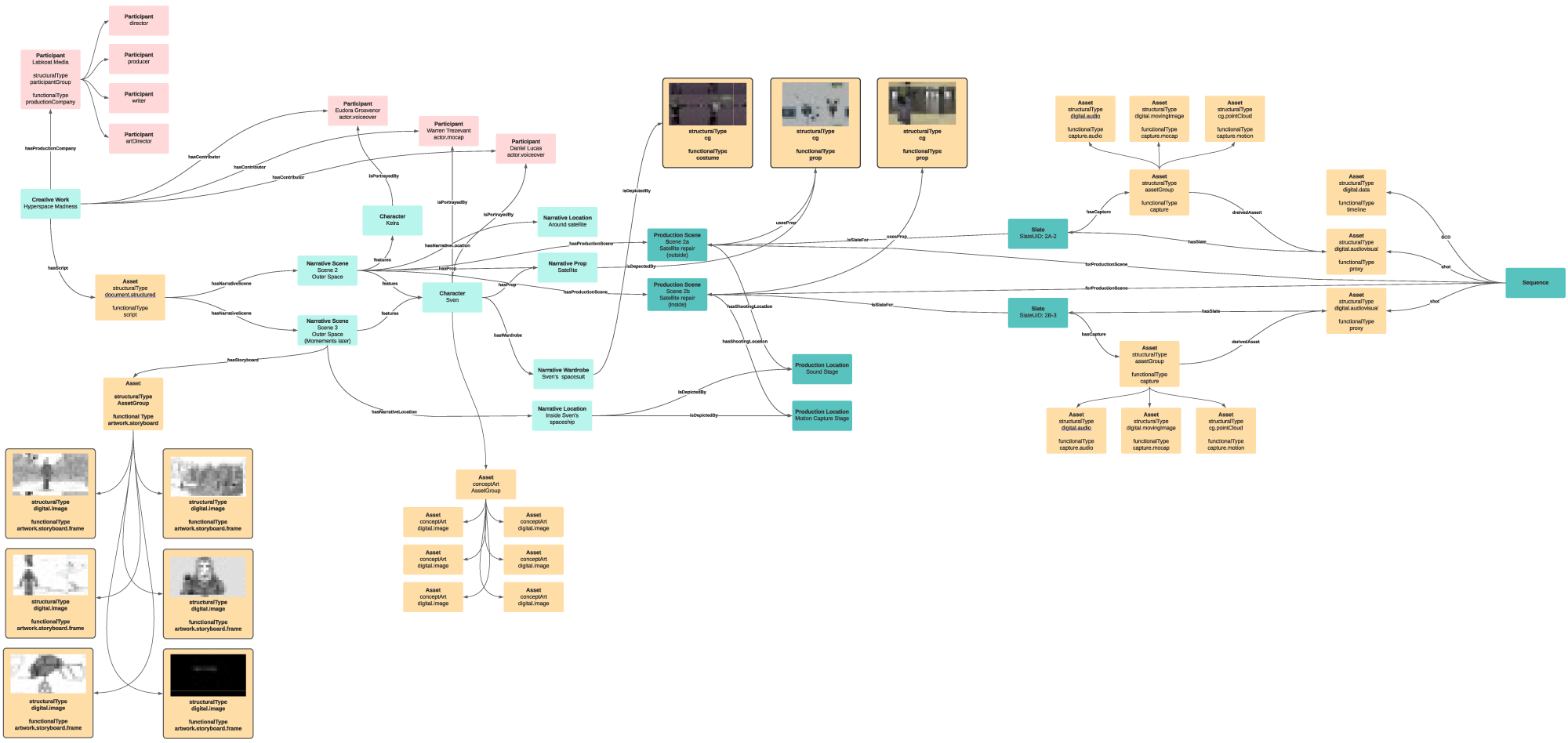

This last diagram shows everything we have talked about across all three blogs in one complete view of the data and relationships. From it, you can see the ripple effect if, for example, the design of the communicator prop changes. This starts with remaking the production prop, carries on through re-filming or re-rendering the production scenes where the communicator is used, and continues to the finished sequences for the narrative scene. This visibility into how everything is connected can help reduce surprises late in the production process.
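The ripple effect is a reachability question over the graph of relationships. The Python sketch below walks a hypothetical "is used by" graph breadth-first from the communicator and lists everything downstream, ending at the affected Sequence. The node names and edges are invented for illustration and cover only a small slice of a real production graph.

```python
from collections import deque

# Hypothetical "is used by" edges: each key feeds into the listed items.
USED_BY = {
    "narrative prop: communicator": ["production prop: communicator"],
    "production prop: communicator": ["production scene: 2A", "production scene: 2B"],
    "production scene: 2A": ["slate: 2A-T001", "slate: 2A-T002"],
    "production scene: 2B": ["slate: 2B-T001"],
    "slate: 2A-T002": ["capture: cap-0004"],
    "slate: 2B-T001": ["capture: cap-0007"],
    "capture: cap-0004": ["shot: sh-010"],
    "capture: cap-0007": ["shot: sh-020"],
    "shot: sh-010": ["sequence: narrative scene 2"],
    "shot: sh-020": ["sequence: narrative scene 2"],
}


def affected_by(change, graph=USED_BY):
    """Breadth-first walk listing everything downstream of a changed element."""
    seen, queue, order = {change}, deque([change]), []
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


impacted = affected_by("narrative prop: communicator")
print([n for n in impacted if n.startswith("sequence:")])
```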

Conclusion

This blog has shown how to use the Ontology for Media Creation to move from production elements to some filmed content, and concludes this series of blogs on using the OMC in the context of a real production.

Thinking beyond the relatively simple examples in this blog series, which use just a couple of scenes and characters, a major production is not just a logistical and creative challenge but also a massive data wrangling operation. And that data is often the cause of complexity and confusion – at MovieLabs we believe we can help simplify that problem dramatically to allow the creative team to spend their precious resources on being creative.

We believe that there are four main benefits from using OMC in this way:

First, using common, standard terms and data models reduces miscommunication, whether between people or between software-based systems. We explored this in the first blog in this series, and the lessons apply to all the others as well.

Second, being explicit about the connections between elements of the production makes it easier to understand dependencies and the consequences of changes, both of which affect scheduling and budget. We dove into this kind of model in Part 2 and then used it heavily in Part 3, which also demonstrates some concrete applications of the model.

Third, OMC enables a new generation of software and applications. OMC is primarily a way of clarifying communication, and clear machine to machine communication is essential in the distributed and cloud-based world. These new applications we’re expecting will support the broader 2030 Vision and can cover everything from script breakdown and scheduling through to on-set activities, VFX, the editorial process, and archives.

Finally, having consistent data is hugely beneficial for emerging technologies such as machine learning and complex data visualization. We therefore hope that OMC will unlock a wave of innovative new software in our industry to accelerate productions and improve the quality of life for all involved.

These blogs are not theoretical. We have been using OMC in our own proof-of-concept work, where we model real production scenarios and explore efficiency and automation in the production process. In that work, this data connectivity is a vital part of delivering a software-defined workflow (for more on Software Defined Workflows, watch this video).

The Ontology for Media Creation is an expanding set of Connected Ontologies – we will continue to add extra definitions, scope and concepts as we broaden the breadth and depth of what it covers, especially as it becomes more operationally deployed. For example, we are currently working on OMC support for versions and variants as well as expanding into new areas of the workflow such as computer graphics assets. In practical terms, the Ontology is available as RDF and JSON, and software developers are working with both. Please let us know if you’d like to try it out in an implementation.

If you found this blog series useful, let us know. If you’re interested in additional blogs or how-to guides, tell us about a specific use case and we can address it (email: info@movielabs.com).

There’s also a wealth of useful information at mc.movielabs.com and movielabs.com/production-technology/ontology-for-media-creation/.